Authors:

(1) Vladislav Trifonov, Skoltech;

(2) Alexander Rudikov, AIRI, Skoltech;

(3) Oleg Iliev, Fraunhofer ITWM;

(4) Ivan Oseledets, AIRI, Skoltech;

(5) Ekaterina Muravleva, Skoltech.

Table of Links

2 Neural design of preconditioner

3 Learn correction for ILU and 3.1 Graph neural network with preserving sparsity pattern

5.2 Comparison with classical preconditioners

5.4 Generalization to different grids and datasets

7 Conclusion and further work, and References

Abstract

Large linear systems are ubiquitous in modern computational science. The main recipe for solving them is to use iterative solvers with well-designed preconditioners. Deep learning models may be used to precondition residuals during the iterations of linear solvers such as the conjugate gradient (CG) method. However, neural network models require an enormous number of parameters to approximate well in this setup. Another approach is to take advantage of small graph neural networks (GNNs) to construct preconditioners with a predefined sparsity pattern. In our work, we recall well-established preconditioners from linear algebra and use them as a starting point for training the GNN. Numerical experiments demonstrate that our approach outperforms both classical methods and neural network-based preconditioning. We also provide a heuristic justification for the loss function used and validate our approach on complex datasets.

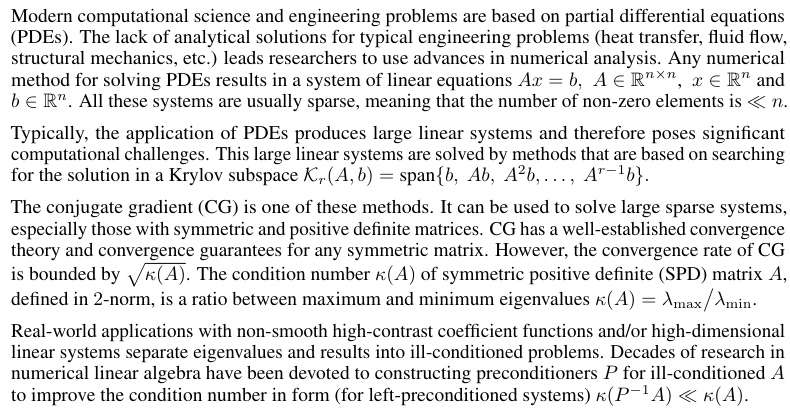

1 Introduction

A well-designed preconditioner should approximate A well, be easy to invert and be sparse. The construction of a preconditioner is typically a trade-off between the quality of the approximation and the cost of storing and inverting the preconditioner Saad [2003].

Recent papers on the application of neural networks to speed up iterative solvers include the use of neural operators as nonlinear preconditioner functions Rudikov et al. [2024], Shpakovych [2023], or within a hybrid approach to address low frequencies Kopaničáková and Karniadakis [2024], Cui et al. [2022], and learning a preconditioner decomposition with graph neural networks (GNNs) Li et al. [2023], Häusner et al. [2023].

We suggest a GNN-based construction that produces better preconditioners than their classical analogues. Our contributions are as follows:

• We propose a novel scheme for preconditioner design in which a GNN learns a correction to well-established preconditioners from linear algebra.

• We suggest a novel understanding of the loss function used, with an emphasis on low frequencies, and provide experimental justification for this understanding.

• We propose a novel approach for dataset generation with a measurable complexity metric that addresses real-world problems.

• We provide extensive studies with varying matrix sizes and dataset complexities to demonstrate the superiority of the proposed approach and loss function over classical preconditioners.

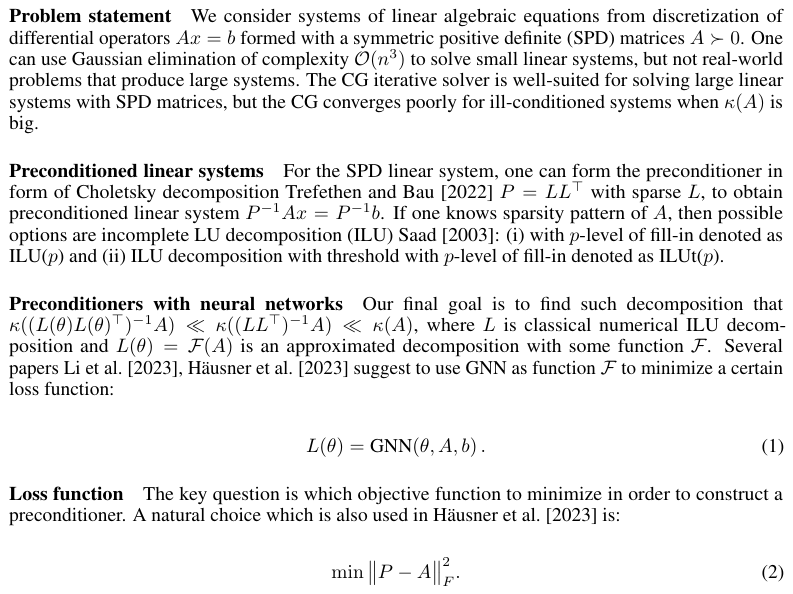

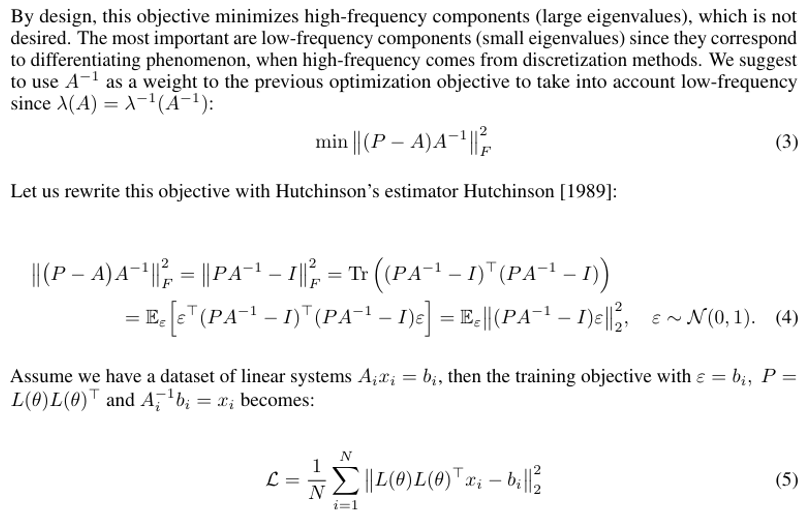

2 Neural design of preconditioner

This loss function previously appeared in related research Li et al. [2023], but there it was understood as an inductive bias from the PDE data distribution. In the experiments section we provide evidence for our hypothesis that loss (5) indeed mitigates low-frequency components.

3 Learn correction for ILU

Our main goal is to construct preconditioners that reduce the condition number of an SPD matrix more than classical preconditioners with the same sparsity pattern do. Because we work with SPD matrices, ILU, ILU(p) and ILUt(p) reduce to the incomplete Cholesky factorizations IC, IC(p) and ICt(p).
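To make the baseline concrete, the following is a minimal sketch of a zero fill-in incomplete Cholesky factorization, IC(0), and of its effect on the condition number. It is an illustration under stated assumptions, not the implementation used in the paper: the test matrix (a dense 5-point Laplacian on a small grid) and the helper names `laplacian_2d`, `ic0` and `preconditioned_cond` are hypothetical, and dense NumPy arrays are used only for brevity.

```python
import numpy as np

def laplacian_2d(n):
    """Dense 5-point Laplacian on an n x n grid (SPD, size n*n). Assumed test matrix."""
    T = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    return np.kron(np.eye(n), T) + np.kron(T, np.eye(n))

def ic0(A):
    """IC(0): Cholesky-like factor L restricted to the sparsity pattern of tril(A)."""
    n = A.shape[0]
    L = np.tril(A).astype(float)
    pattern = L != 0
    for k in range(n):
        L[k, k] = np.sqrt(L[k, k])
        # Rows below the diagonal that are nonzero in column k.
        rows = np.nonzero(pattern[k + 1:, k])[0] + k + 1
        L[rows, k] /= L[k, k]
        # Update the remaining entries, but only inside the pattern (no fill-in).
        for j in rows:
            for i in rows[rows >= j]:
                if pattern[i, j]:
                    L[i, j] -= L[i, k] * L[j, k]
    return L

def preconditioned_cond(A, L):
    """Condition number of the symmetrically preconditioned matrix L^{-1} A L^{-T}."""
    X = np.linalg.solve(L, A)        # L^{-1} A
    M = np.linalg.solve(L, X.T).T    # L^{-1} A L^{-T}
    eig = np.linalg.eigvalsh((M + M.T) / 2)
    return eig[-1] / eig[0]

A = laplacian_2d(8)
L = ic0(A)
cond_A = np.linalg.cond(A)
cond_prec = preconditioned_cond(A, L)
print(f"cond(A) = {cond_A:.2f}, cond(L^-1 A L^-T) = {cond_prec:.2f}")
```

The factor `L` has nonzeros only where the lower triangle of `A` does, which is the constraint a learned preconditioner with the same sparsity pattern would also have to respect.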

3.1 Graph neural network with preserving sparsity pattern

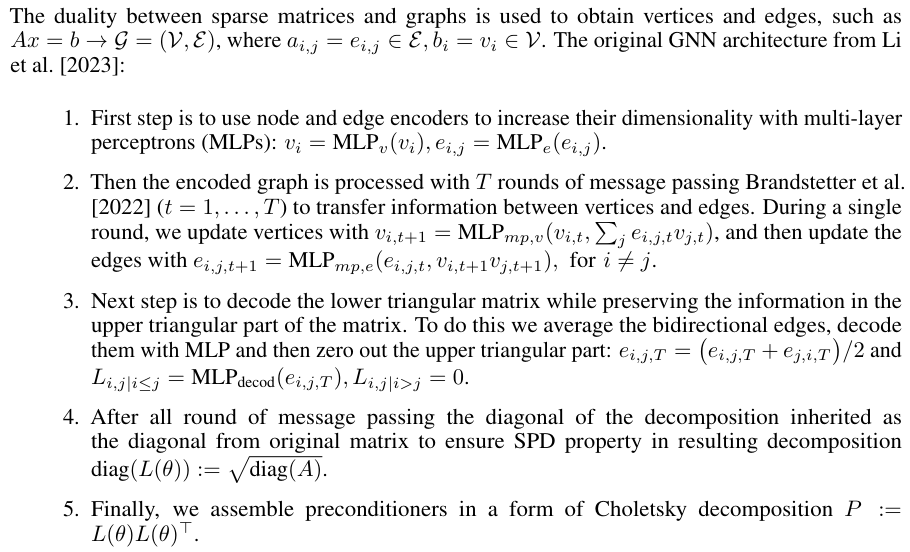

Following the idea from Li et al. [2023], we use a GNN architecture Zhou et al. [2020] to preserve the sparsity pattern and predict a lower triangular matrix, from which the preconditioner is built in the form of an IC decomposition.
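The sketch below illustrates how per-edge values could be placed on the fixed lower-triangular pattern of A and then used as a preconditioner P = LLᵀ inside CG. No GNN is trained here: `stand_in_values` is a hypothetical placeholder for the network output and returns the symmetric Gauss-Seidel factor (D + E)D^{-1/2}, a classical choice with the same pattern. The test matrix, function names and tolerances are all assumptions made for illustration.

```python
import numpy as np

def laplacian_2d(n):
    """Dense 5-point Laplacian on an n x n grid (SPD). Assumed test matrix."""
    T = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    return np.kron(np.eye(n), T) + np.kron(T, np.eye(n))

def assemble_lower(A, values):
    """Place one value per nonzero of tril(A); the pattern is fixed by A."""
    rows, cols = np.nonzero(np.tril(A))
    L = np.zeros_like(A, dtype=float)
    L[rows, cols] = values
    return L

def stand_in_values(A):
    """Hypothetical stand-in for the GNN output: entries of (D + E) D^{-1/2}."""
    rows, cols = np.nonzero(np.tril(A))
    return A[rows, cols] / np.sqrt(np.diag(A)[cols])

def pcg(A, b, apply_prec=None, tol=1e-8, max_iter=1000):
    """Preconditioned CG; returns the solution and the iteration count."""
    x = np.zeros_like(b)
    r = b.copy()
    z = apply_prec(r) if apply_prec else r.copy()
    p = z.copy()
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for it in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * b_norm:
            return x, it
        z = apply_prec(r) if apply_prec else r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter

A = laplacian_2d(16)
L = assemble_lower(A, stand_in_values(A))

def apply_prec(r):
    # P^{-1} r with P = L L^T: forward solve, then backward solve.
    # Dense solves for brevity; sparse triangular solves would be used in practice.
    return np.linalg.solve(L.T, np.linalg.solve(L, r))

rng = np.random.default_rng(0)
b = rng.standard_normal(A.shape[0])
x_cg, it_cg = pcg(A, b)
x_pcg, it_pcg = pcg(A, b, apply_prec)
print(f"CG iterations: {it_cg}, preconditioned CG iterations: {it_pcg}")
```

Because only the values vector changes while the index arrays come from A, any model that outputs one number per lower-triangular nonzero keeps the storage and application cost of the classical factor.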

This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.